[Database] Bustub Buffer Pool Manager Implementation

Implement a buffer pool manager for a DBMS, from scratch, in C++.

Per CMU policy, Bustub solution should not be made public. Hence, only code snippets and logic are shown here. If you need to see the code, email me: yy4108@nyu.edu.

I am on my train to Qingdao right now, to see a beautiful girl. I am not so sure about how I feel, excited, but at the same time nervous. It is even freezing in Shanghai recently, let alone Qingdao. It is my first time, travelling so far, just to see someone.

I want to be productive on a train, but it is not easy. Writing something about buffer pool manager will probably calm me down.

We will follow the guides and instruction of Andy Pavlo, and his CMU 15-445 course, to implement a buffer pool manager for a database management system, in C++.

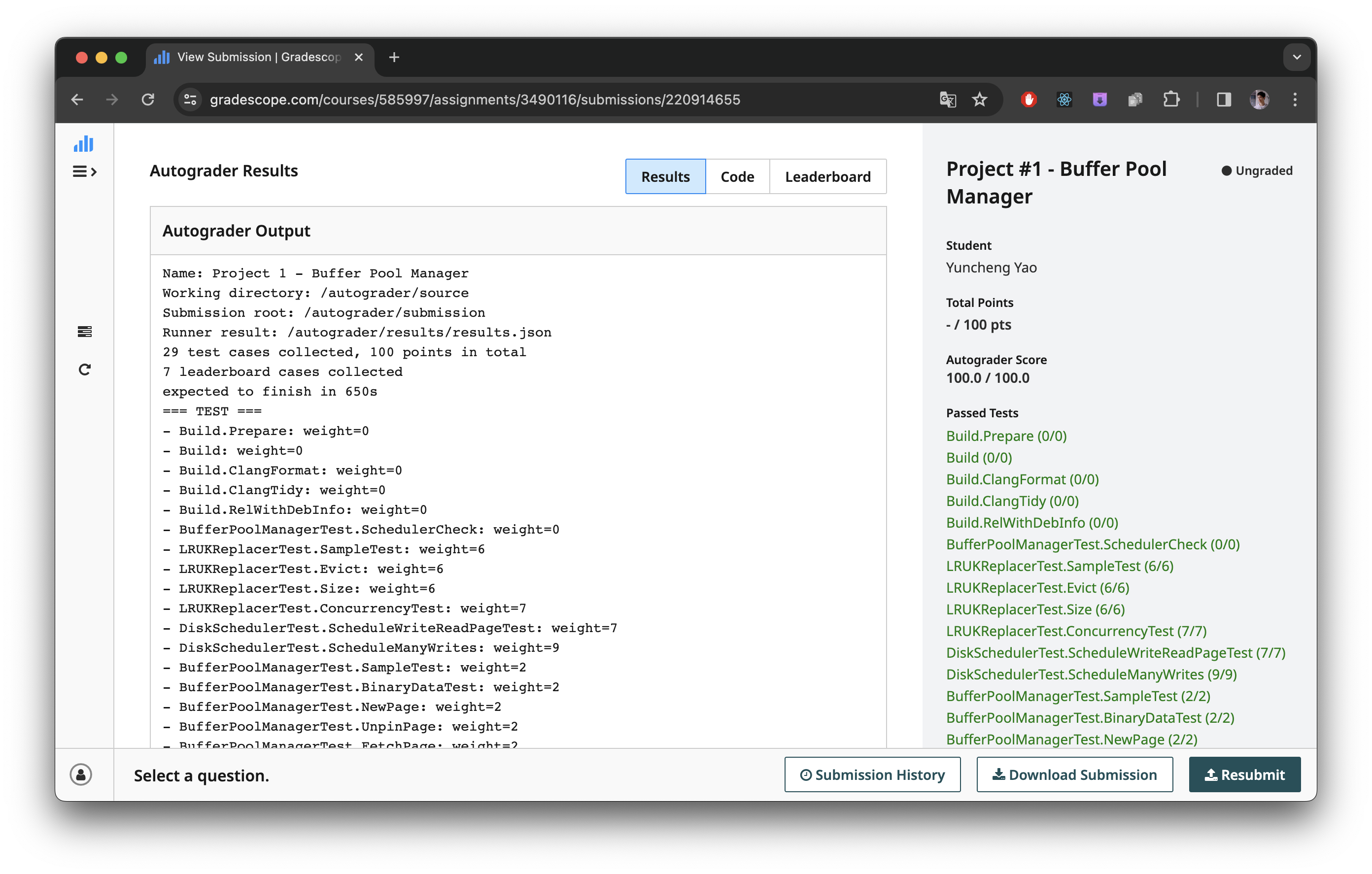

First, let me prove myself with a Gradescoppe screenshot.

You can indeed be convinced that I know what I will be talking about.

LRU-K Replacement Policy

Implementing an eviction policy is always the first step in any buffer pool manager implementation. The reason being that we always need to figure out a way to decide which page (frame) to evict when the buffer pool is full.

Per the instruction of CMU, here we will be implementing an LRU-K policy. First let’s take a look at what is expected in an LRU replacer.

The LRU-K algorithm evicts a frame whose backward k-distance is maximum of all frames in the replacer. Backward k-distance is computed as the difference in time between current timestamp and the timestamp of kth previous access. A frame with fewer than k historical accesses is given +inf as its backward k-distance. When multiple frames have +inf backward k-distance, the replacer evicts the frame with the earliest overall timestamp (i.e., the frame whose least-recent recorded access is the overall least recent access, overall, out of all frames).

The maximum size for the

LRUKReplaceris the same as the size of the buffer pool since it contains placeholders for all of the frames in theBufferPoolManager. However, at any given moment, not all the frames in the replacer are considered to be evictable. The size ofLRUKReplaceris represented by the number of evictable frames. TheLRUKReplaceris initialized to have no frames in it. Then, only when a frame is marked as evictable, replacer’s size will increase.

With the basic idea in mind, let’s discuss how to implement in detail.

1 | LRUKReplacer::LRUKReplacer(size_t num_frames, size_t k) : replacer_size_(num_frames), k_(k) {} |

First, implement the constructor. num_frames is the number of total frames available, and the k_ here should be the number of evictable frames.

The main logic of LRU-K is in the Evict function.

1 | auto LRUKReplacer::Evict(frame_id_t *frame_id) -> bool { |

The logic is simple:

- check all nodes that are marked evictable by the replacer.

- If it has less than k elements, then its k distance is set to infinite (the most likely to be replaced).

- If we have multiple +inf distance nodes, then fall back to the original LRU policy. Just pick the oldest among these +inf nodes. (This is the purpose that found_infinite is servering)

- Otherwise, if all evictable pages have more than k access records, follow the LRU-K policy, and pick the oldest.

RecordAccess function is also important. It should be called anytime we touch a frame (read/write it from the buffer pool manager).

1 | void LRUKReplacer::RecordAccess(frame_id_t frame_id, [[maybe_unused]] AccessType access_type) { |

- fist check if the page that you are trying to access is actually legal. If not, raise an exception.

- If the LRU node is already in the Hash Map, just update the node history (evict the oldest if there is more than K accesses)

- if not found, init a new node element in the hash map.

SetEvictable is simple, you just need to check if the frame_id you are setting is legal and can actually be found in the hash map. Then you set evictablity based on the parameter. Finally, remember to update the current_size_, which represents the number of evictable pages.

1 | void LRUKReplacer::SetEvictable(frame_id_t frame_id, bool set_evictable) { |

The last componenet of an LRU is a Remove function, which removes everything from the replacer related with a certain page.

1 | oid LRUKReplacer::Remove(frame_id_t frame_id) { |

- check legal

- find the actual element

- update the hash map

Disk Scheduler and Manager

The disk scheduler can be used by other components (in this case, your

BufferPoolManagerin Task #3) to queue disk requests, represented by aDiskRequeststruct (already defined insrc/include/storage/disk/disk_scheduler.h). The disk scheduler will maintain a background worker thread which is responsible for processing scheduled requests.

The disk scheduler will utilize a shared queue to schedule and process the DiskRequests. One thread will add a request to the queue, and the disk scheduler’s background worker will process the queued requests. We have provided a

Channelclass insrc/include/common/channel.hto facilitate the safe sharing of data between threads, but feel free to use your own implementation if you find it necessary.

This is actually quite easy, and I think the main challenge is to familarize yourself with the Promise and Future in C++ 17. (Cool stuff, but reminds me deeply of the trauma I had with JavaScript’s Promise and Future).

The main challenge is to implement a thread safe channel construct, which has been provided already. The rest is very straightforward, as long as you are familiar with the move semantics.

1 | void DiskScheduler::Schedule(DiskRequest r) { request_queue_.Put(std::make_optional(std::move(r))); } |

1 | struct DiskRequest { |

Since the DiskRequest contains a Promise object, it cannot be copied, but only moved to the shared queue. After the thread has finished its job, it sets the flag in the callback Promise.

Buffer Pool Manager

This is the main challenge. It is intricate, and delicate, and very hard to debug. It took me several hours to finally get it right (and I an still not sure which part that I touched made things come to the right track).

1 | auto BufferPoolManager::NewPage(page_id_t *page_id) -> Page * { |

This function allocates a new page in the buffer pool manager. The logic is that:

- If there is a page in the free list, allocate directly.

- otherwise we need to evict a oage from the LRU replacer (Do remember to detach the original evicted page, delete it from page table, and remove it from the replacer, otherwise the LRU-K policy will not be functioning correctly)

- don’t forget that if the page is dirty, we need to flush it to disk.

- initialize the new page with a pin count, a dirty flag, a new page id(unique to this sepcific buffer pool manager), and touch the page with the recordAccess.

1 | auto BufferPoolManager::FetchPage(page_id_t page_id, [[maybe_unused]] AccessType access_type) -> Page * { |

FetchPage implementation shares exactly the same logic as the NewPage, the only difference being that after fetching a free page, we need to also fetch the page content from the disk, using the Promise and future. Here we only care about correctness, so wait until IO is finished.

1 | auto BufferPoolManager::UnpinPage(page_id_t page_id, bool is_dirty, [[maybe_unused]] AccessType access_type) -> bool { |

The thing that I got wrong with this unpin funciton initially is that here we are not writing to disk, we are only unpinning. To achieve good performance, we want to delay IO as much as possible, instead of writing to the disk everyting we unpins.

Flush page is easy, just write to disk, regardless of the dirty bit. The dirty bit will be checked by upper layers. But we do need to clear the dirty flag after writing to disk.

1 | auto BufferPoolManager::FlushPage(page_id_t page_id) -> bool { |

DeletePage

1 | auto BufferPoolManager::DeletePage(page_id_t page_id) -> bool { |

DeletePage is also easy, just remember to

- not delete if the page is still pinned.

- flush the page if it is dirty.

- clear all page table and LRU replacer entry.

- add the page back to the freelist.

Ending Remark

It looks easy, but the logic can be complicated, a little. And it is very hard to debug. Nevertheless, implementing a buffer pool manager is important for me to understand how a disk backed dbms really worked internally. A good lesson to learn, I would say.

[Database] Bustub Buffer Pool Manager Implementation

http://peteryaonyu.github.io/2023/12/18/Database-Bustub-Buffer-Pool-Manager-Implementation/